Are You Truly Leveraging Your Data for Digital Success?

In today's fast-paced digital landscape, data is the lifeblood of any successful organization. From powering insightful business intelligence (BI) dashboards to fueling advanced analytics models and ensuring stringent compliance, managing and leveraging data effectively is paramount. Yet, many businesses struggle under the weight of scattered, inconsistent, and poorly documented data. This chaos hinders decision-making, breeds inefficiency, and exposes organizations to significant risks.

At NeenOpal, we understand that transforming raw data into a strategic asset requires more than just tools; it demands a structured, comprehensive approach to digital data management and documentation. Drawing on best practices outlined in resources such as the Data Usage and Documentation Manual, this article explores why data management matters for business leaders, data teams, and IT decision-makers alike.

The Imperative for Structured Data

Why is data management important? Organizations risk misinterpretation, compliance failures, and missed growth opportunities without a structured system. Structured data refers to data that has been organized into a formatted repository, typically a database, where fields are clearly defined and related. This structure is fundamental because it enables machines and humans to understand, process, and analyze data easily. Without structure, data remains a liability rather than an asset.
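
To make the definition concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables, their columns, and the sample rows are hypothetical. The point is that every field has a declared type and constraints and the relationship between tables is explicit, so both people and software can query the data reliably and the database itself rejects records that break the rules.

```python
import sqlite3

# In-memory database for illustration; a real system would use a persistent database or warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is enabled

# Clearly defined fields (types and constraints) and an explicit relationship between tables.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    region      TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0),
    order_date  TEXT NOT NULL  -- ISO 8601 date, e.g. 2024-01-15
);
""")

conn.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                 [(1, "Acme Corp", "EMEA"), (2, "Globex", "APAC")])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)",
                 [(10, 1, 250.0, "2024-01-15"),
                  (11, 1, 99.5, "2024-02-01"),
                  (12, 2, 40.0, "2024-02-03")])
conn.commit()

# Because the relationship is declared, a join answers a business question directly.
revenue_by_region = conn.execute("""
    SELECT c.region, SUM(o.amount)
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()
print(revenue_by_region)  # [('APAC', 40.0), ('EMEA', 349.5)]

# The schema also rejects records that break its rules: here, an order for a nonexistent customer.
fk_rejected = False
try:
    conn.execute("INSERT INTO orders VALUES (13, 99, 10.0, '2024-03-01')")
except sqlite3.IntegrityError:
    fk_rejected = True
print("orphan order rejected:", fk_rejected)
```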

The Data Usage and Documentation Manual serves as a comprehensive guide designed to ensure consistent data documentation practices across an organization. Its purpose is to promote clarity in data management processes, enhance team collaboration, support data governance, improve data quality, and foster compliance with internal and external regulations. These goals are directly tied to unlocking the full potential of your data.

Effective data usage and documentation are crucial for:

- Enhancing collaboration between teams.

- Providing a framework for documenting key data usage standards.

- Supporting data governance.

- Improving data quality.

- Fostering compliance with internal and external regulations.

The manual is intended for a wide range of stakeholders, including Data Engineers, Analysts, Stewards, Compliance and Security Teams, and End Users (such as department heads and operational teams) who rely on documented data practices for decision-making. This breadth shows that data management is not only an IT concern but a cross-functional necessity.

It provides guidelines for critical areas such as Data Collection and Management, Data Governance, Reporting and Sharing, and Compliance with Legal Standards. Although it focuses on internal best practices, templates, and workflows for data management and documentation, its guidelines apply regardless of the technology used.

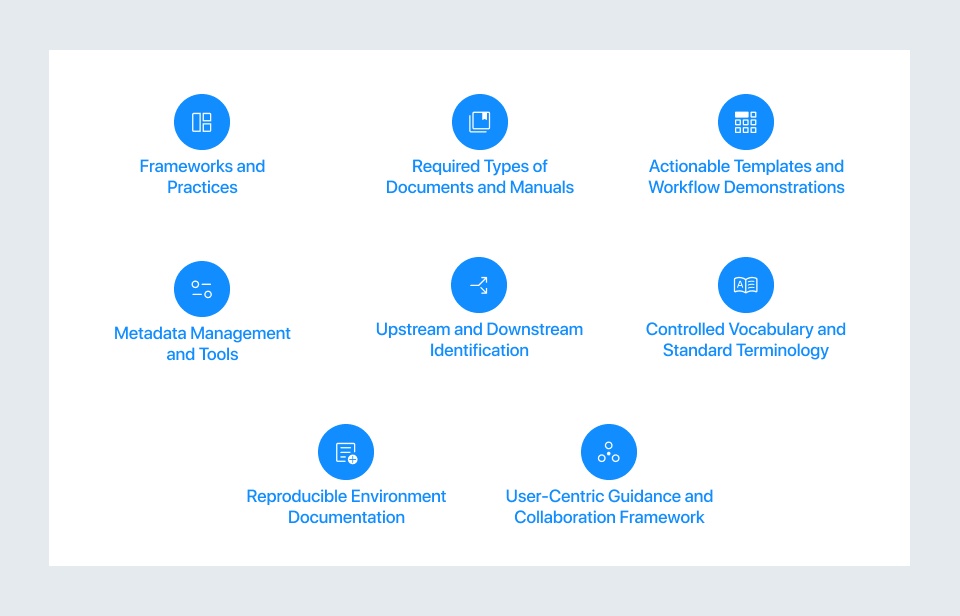

Core Elements of a Robust Data Management Framework

Building a strong data management foundation involves several interconnected core elements. Let's examine each one, following the structure of the Data Usage and Documentation Manual.

1. Frameworks and Practices

Structuring and documenting data management processes ensures clarity, efficiency, and consistency. This involves:

- Process Documentation: Creating detailed descriptions and instructions for every process stage, from data collection to usage. Proper documentation ensures stakeholders understand the steps involved, facilitating smoother operations and better compliance.

  - Key practices include using Standard Operating Procedures (SOPs) to ensure consistency and repeatability, Workflow Diagrams to represent data flow visually, and regular Process Reviews and Audits to ensure relevance and compliance.

  - Best practices recommend keeping processes simple, repeatable, and clear, using version control to track updates, and engaging relevant stakeholders.

  - For example, a documented data collection process might specify that data is collected from internal databases, validated for quality, and then stored in a secure data warehouse.

- Technical Writing Practices: Crucial for communicating complex data concepts clearly to both technical and non-technical audiences.

  - Key practices include prioritizing clarity and simplicity, using consistent terminology, and tailoring documentation for non-technical audiences.

  - Best practices suggest using the active voice, breaking content into digestible sections with headings, and including a glossary for technical terms.

  - For example, API endpoint documentation describes each endpoint's parameters, data formats, and responses, and includes sample requests and responses.
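
As a minimal sketch of the API endpoint example above, endpoint documentation can be kept as structured data and rendered into readable text, which makes it easy to review and keep under version control. The `Param` and `EndpointDoc` structures and the `GET /v1/customers/{customer_id}` endpoint are hypothetical; teams documenting real REST APIs often use the OpenAPI Specification for the same purpose.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Param:
    name: str
    location: str   # "path", "query", or "header"
    data_type: str
    required: bool
    description: str


@dataclass
class EndpointDoc:
    method: str
    path: str
    summary: str
    params: list[Param] = field(default_factory=list)
    responses: dict[int, str] = field(default_factory=dict)  # status code -> meaning
    example_request: str = ""
    example_response: dict = field(default_factory=dict)

    def render(self) -> str:
        """Render the endpoint as plain-text reference documentation."""
        lines = [f"{self.method} {self.path}", self.summary, "", "Parameters:"]
        for p in self.params:
            req = "required" if p.required else "optional"
            lines.append(f"  {p.name} ({p.location}, {p.data_type}, {req}): {p.description}")
        lines.append("Responses:")
        for code, meaning in sorted(self.responses.items()):
            lines.append(f"  {code}: {meaning}")
        lines.append("Example request:")
        lines.append(f"  {self.example_request}")
        lines.append("Example response:")
        lines.append(json.dumps(self.example_response, indent=2))
        return "\n".join(lines)


# Hypothetical endpoint, used only to show the structure.
doc = EndpointDoc(
    method="GET",
    path="/v1/customers/{customer_id}",
    summary="Return a single customer record.",
    params=[
        Param("customer_id", "path", "integer", True, "Unique customer identifier."),
        Param("fields", "query", "string", False, "Comma-separated list of fields to return."),
    ],
    responses={200: "Customer found.", 404: "No customer with this ID."},
    example_request="GET /v1/customers/42?fields=name,region",
    example_response={"customer_id": 42, "name": "Acme Corp", "region": "EMEA"},
)
print(doc.render())
```

Because the documentation is data, a review step can also check it automatically, for instance by flagging endpoints that have no example response.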

2. Required Types of Documents and Manuals

Effective data management relies on specific types of documentation to track, validate, and govern data and systems.

- Functional Validation Document: Evaluates platform functionality post-launch to ensure features meet business and user needs. Key components include Validation Reports and Retrospective Findings, leading to Actionable Improvements. Users include Operations teams and Business Analysts, while Stakeholders include Business Owners and Compliance Teams. Risks of not using this include unidentified issues disrupting operations and missed opportunities for improvement.

- Feature Evolution and Roadmap Document: Tracks existing features and outlines future enhancements, aligning development with user needs and organizational goals. Components include Feature Tracking, Timeline Management, and a Feedback Loop. Users are the Development and QA teams, and Stakeholders include Marketing teams and Executive Sponsors. Risks include misaligned development priorities and a lack of clarity.

- Business Impact and Integration Document: Evaluates platform contribution to business goals and guides seamless integration. Key components are Impact Assessment, Integration Insights, and Optimization Recommendations. Users are Department heads and IT operations teams, with Executives and Business Partners as Stakeholders. Risks include misaligned functionalities and integration challenges.

- Technical Maintenance and Infrastructure Guide: Guides technical upkeep, ensuring stable operations, troubleshooting, and scaling. Includes Maintenance Procedures, Troubleshooting Playbooks, Scaling and Optimization guidelines, and Data Flow Diagrams (DFDs) showing data movement and component interaction. Users are Support engineers and technical operations teams, with Business leaders and Compliance officers as Stakeholders. Risks involve unaddressed technical issues and inefficient scaling.

- Developer and Integration Toolkit: Provides resources (APIs, SDKs, coding guidelines) for developers to integrate with and extend the platform. Includes API and SDK Documentation, Integration Guidelines, and Code Examples. Users are external and internal developers, with Product managers and clients as Stakeholders. Risks include integration issues and a lack of developer engagement.

- Data Governance and Compliance Manual: Outlines policies and practices for secure and compliant data handling. Key components include Data Access Policies, Compliance Checklists, and Monitoring and Auditing Procedures. Users are Data analysts, Legal teams, and Security personnel, with Regulatory authorities, Clients, and Executives as Stakeholders. Benefits include ensuring compliance and protecting reputation, while risks involve legal/financial repercussions and data breaches.

- Data Pipeline Document: Provides a structured overview of data flow from source (upstream) to target (downstream), detailing ETL/ELT processes, error handling, and recovery. Components include Data Sources (Upstream), ETL/ELT Processes (Extract, Transform, Load), Data Targets (Downstream), Error Handling and Recovery, and Monitoring and Notifications. Users are Data analysts, Developers, and Business intelligence teams, with Business leaders and Compliance officers as Stakeholders. Risks include increased pipeline failures and delays in decision-making.

- Data Dictionary: A centralized repository describing data elements' structure, format, and meaning. Key components include Data Fields (with names, types, constraints), Dataset Relationships, and Data Validation Rules. Users are Data analysts and developers, with Data Governance Teams as Stakeholders. Benefits include enhanced data discoverability and reduced misinterpretation, while risks include data inconsistencies and miscommunication.
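
A Data Dictionary's field definitions and validation rules can also be made executable, so the same document that explains the data is used to check it. The sketch below assumes a hypothetical `orders` dataset; `FieldSpec`, `DATA_DICTIONARY`, and `validate_record` are illustrative names, not part of any particular tool. Dataset relationships are recorded only as descriptions here; enforcing them would need the database itself or a separate check.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class FieldSpec:
    name: str
    dtype: type                                    # expected Python type
    description: str                               # business meaning
    nullable: bool = False
    rule: Optional[Callable[[Any], bool]] = None   # extra validation rule
    rule_text: str = ""                            # human-readable form of the rule


# Hypothetical dictionary for an orders dataset.
DATA_DICTIONARY = {spec.name: spec for spec in [
    FieldSpec("order_id", int, "Unique order identifier."),
    FieldSpec("customer_id", int, "Customer who placed the order; references customers.customer_id."),
    FieldSpec("amount", float, "Order value in USD.",
              rule=lambda v: v >= 0, rule_text="must be >= 0"),
    FieldSpec("status", str, "Fulfillment status.",
              rule=lambda v: v in {"open", "shipped", "cancelled"},
              rule_text="must be one of open, shipped, cancelled"),
    FieldSpec("coupon_code", str, "Promotion code, if one was used.", nullable=True),
]}


def validate_record(record: dict, dictionary: dict) -> list[str]:
    """Return human-readable problems; an empty list means the record is valid."""
    errors = []
    for name, spec in dictionary.items():
        value = record.get(name)
        if value is None:
            if not spec.nullable:
                errors.append(f"{name}: missing value")
            continue
        if not isinstance(value, spec.dtype):
            errors.append(f"{name}: expected {spec.dtype.__name__}, got {type(value).__name__}")
        elif spec.rule is not None and not spec.rule(value):
            errors.append(f"{name}: {spec.rule_text}")
    # Fields that the dictionary does not define are a documentation gap.
    for name in sorted(record.keys() - dictionary.keys()):
        errors.append(f"{name}: not defined in the data dictionary")
    return errors


good = {"order_id": 10, "customer_id": 1, "amount": 250.0, "status": "shipped", "coupon_code": None}
bad = {"order_id": 11, "customer_id": "1", "amount": -5.0, "status": "lost", "channel": "web"}
print(validate_record(good, DATA_DICTIONARY))  # []
print(validate_record(bad, DATA_DICTIONARY))
```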

3. Actionable Templates and Workflow Demonstrations

Standardized templates and workflows provide structure and step-by-step guidance for data processes.

- Templates: Examples include the Data Integration Plan Template (outlining data source integration), Data Quality Checklist Template (ensuring data meets standards), and Data Flow Design Template (visually mapping data movement).

- Workflows: Examples include the Data Integration Workflow (guiding data consolidation), Data Issue Resolution Workflow (handling errors and inconsistencies), and API Usage Workflow (standardizing API interaction).

- Visual Aids: The Data Flow Diagram (DFD) illustrates data movement and interaction within the system, helping users understand data flow and improving system design. The Entity Relationship Diagram (ERD) visually represents the data model and relationships between entities, providing a clear data structure and aiding database design.
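
Items from a Data Quality Checklist Template can be turned into repeatable checks that run on every load. The sketch below covers four common checklist items (row count, key uniqueness, completeness, and freshness) over an in-memory list of rows; the thresholds, column names, and sample data are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CheckResult:
    check: str
    passed: bool
    detail: str


def check_row_count(rows, minimum):
    n = len(rows)
    return CheckResult("row count", n >= minimum, f"{n} rows (minimum {minimum})")


def check_unique(rows, key):
    values = [r.get(key) for r in rows]
    duplicates = len(values) - len(set(values))
    return CheckResult(f"unique {key}", duplicates == 0, f"{duplicates} duplicate value(s)")


def check_completeness(rows, column, max_missing_rate):
    missing = sum(1 for r in rows if r.get(column) in (None, ""))
    rate = missing / len(rows) if rows else 1.0
    return CheckResult(f"completeness of {column}", rate <= max_missing_rate,
                       f"{rate:.0%} missing (allowed {max_missing_rate:.0%})")


def check_freshness(rows, column, as_of, max_age_days):
    if not rows:
        return CheckResult(f"freshness of {column}", False, "no rows")
    latest = max(date.fromisoformat(r[column]) for r in rows)
    age = (as_of - latest).days
    return CheckResult(f"freshness of {column}", age <= max_age_days,
                       f"latest {latest}, {age} day(s) old (allowed {max_age_days})")


# Hypothetical sample with one blank email and one duplicated order_id.
rows = [
    {"order_id": 10, "email": "a@example.com", "order_date": "2024-03-01"},
    {"order_id": 11, "email": "", "order_date": "2024-03-02"},
    {"order_id": 11, "email": "c@example.com", "order_date": "2024-03-03"},
]
results = [
    check_row_count(rows, minimum=1),
    check_unique(rows, "order_id"),
    check_completeness(rows, "email", max_missing_rate=0.10),
    check_freshness(rows, "order_date", as_of=date(2024, 3, 5), max_age_days=7),
]
for res in results:
    print("PASS" if res.passed else "FAIL", res.check, "-", res.detail)
```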

4. Metadata Management and Tools

Effective cataloging and metadata management make data discoverable, understandable, and usable. A Data Catalog acts as a repository for metadata, enhancing searchability, tracking data lineage, and supporting governance. Metadata itself is information describing data (technical, business, operational).

Together, these practices improve Data Discovery, enhance Data Understanding, ensure Data Governance, and increase Operational Efficiency. Tools such as the AWS Glue Data Catalog provide centralized metadata storage, automatic schema discovery, data lineage tracking, and integration with cloud services, which is particularly beneficial for organizations leveraging cloud-native solutions.
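
To show what a catalog entry might hold, here is a minimal in-memory sketch that groups technical, business, and operational metadata, supports keyword search, and traces lineage through upstream dependencies. It is not the AWS Glue Data Catalog API; the `DataCatalog` class, dataset names, owners, and storage locations are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    # Technical metadata
    schema: dict[str, str]          # column -> type
    location: str
    # Business metadata
    description: str
    owner: str
    tags: set[str] = field(default_factory=set)
    # Operational metadata
    refresh_schedule: str = "unknown"
    # Lineage: datasets this one is built from
    upstream: list[str] = field(default_factory=list)


class DataCatalog:
    def __init__(self):
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[str]:
        """Return names of datasets whose name, description, tags, or columns mention the term."""
        term = term.lower()
        hits = []
        for entry in self._entries.values():
            searchable = [entry.name, entry.description, *entry.tags, *entry.schema]
            if any(term in text.lower() for text in searchable):
                hits.append(entry.name)
        return sorted(hits)

    def lineage(self, name: str) -> list[str]:
        """Return every dataset `name` depends on, directly or indirectly."""
        seen = set()
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            if current in self._entries:  # uncatalogued sources end the trace
                stack.extend(self._entries[current].upstream)
        return sorted(seen)


catalog = DataCatalog()
catalog.register(CatalogEntry(
    name="raw_orders", schema={"order_id": "int", "amount": "float"},
    location="s3://example-bucket/raw/orders/",
    description="Orders exported nightly from the order system.",
    owner="sales-ops", tags={"orders", "raw"}, refresh_schedule="daily 01:00"))
catalog.register(CatalogEntry(
    name="dim_customers", schema={"customer_id": "int", "region": "string"},
    location="warehouse.analytics.dim_customers",
    description="Customer master data.",
    owner="data-stewards", tags={"customers"}, refresh_schedule="daily 02:00",
    upstream=["crm_export"]))  # crm_export is referenced but not catalogued yet
catalog.register(CatalogEntry(
    name="fact_revenue", schema={"region": "string", "revenue": "float"},
    location="warehouse.analytics.fact_revenue",
    description="Revenue by region for BI dashboards.",
    owner="bi-team", tags={"finance"}, refresh_schedule="daily 03:00",
    upstream=["raw_orders", "dim_customers"]))

print(catalog.search("revenue"))         # ['fact_revenue']
print(catalog.lineage("fact_revenue"))   # ['crm_export', 'dim_customers', 'raw_orders']
```

The lineage result also surfaces `crm_export`, a source that is referenced but not yet catalogued, which is exactly the kind of gap a catalog review should catch.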

5. Upstream and Downstream Identification

Understanding the flow of data is crucial.

- Upstream Data Sources: These are where data originates (e.g., transactional data, external APIs, unstructured data, real-time streams). Identifying these sources helps ensure accurate, high-quality data extraction and makes dependencies easier to manage. They supply the raw data for the Extract phase of ETL.

- Downstream Data Consumers: These systems or processes consume data after transformation and loading (e.g., Data Warehouses/Data Lakes, Business Intelligence Tools, Machine Learning Models, APIs, External Systems). Understanding consumer needs helps optimize data structure and accessibility.

A well-managed data pipeline with clear identification of upstream and downstream systems ensures a smooth flow, improves data quality, and aligns the system with business needs. This is vital for both real-time and batch data processing.
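
The sketch below walks one batch from an upstream source through the Extract, Transform, and Load steps into a downstream table, and also illustrates the error handling and notification components listed for the Data Pipeline Document. The CSV content, table name, and 10% rejection threshold are hypothetical; an in-memory SQLite table stands in for a data warehouse, and logging stands in for a real alerting channel.

```python
import csv
import io
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("orders_pipeline")

# Upstream source: stands in for a CSV export from a transactional system (hypothetical data).
UPSTREAM_CSV = """order_id,customer_id,amount
10,1,250.00
11,1,not-a-number
12,2,40.00
"""


def extract(text):
    """Extract: read raw rows from the upstream source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types, quarantining rows that fail instead of aborting the run."""
    clean, quarantined = [], []
    for row in rows:
        try:
            clean.append((int(row["order_id"]), int(row["customer_id"]),
                          round(float(row["amount"]), 2)))
        except (KeyError, ValueError) as exc:
            quarantined.append((row, str(exc)))
    return clean, quarantined


def load(rows, conn):
    """Load: write clean rows to the downstream target in a single transaction."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("CREATE TABLE IF NOT EXISTS orders "
                     "(order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    return len(rows)


def run_pipeline(source_text, conn, max_reject_rate=0.10):
    raw = extract(source_text)
    clean, quarantined = transform(raw)
    loaded = load(clean, conn)
    # Monitoring and notifications: log every rejected row, escalate if too many fail.
    for row, reason in quarantined:
        log.warning("quarantined %s: %s", row, reason)
    reject_rate = len(quarantined) / len(raw) if raw else 0.0
    if reject_rate > max_reject_rate:
        log.error("reject rate %.0f%% exceeds %.0f%%; notify the pipeline owner",
                  reject_rate * 100, max_reject_rate * 100)
    log.info("loaded %d of %d rows", loaded, len(raw))
    return {"extracted": len(raw), "loaded": loaded, "quarantined": len(quarantined)}


warehouse = sqlite3.connect(":memory:")  # downstream target stand-in
summary = run_pipeline(UPSTREAM_CSV, warehouse)
print(summary)  # {'extracted': 3, 'loaded': 2, 'quarantined': 1}
```

Quarantining bad rows keeps one malformed record from blocking the whole batch, while the threshold still escalates when upstream data quality drops sharply.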

6. Controlled Vocabulary and Standard Terminology

Using a consistent set of terms across documentation ensures clear communication and avoids confusion. Maintaining a Controlled Vocabulary and Standard Terminology, supported by a glossary and style guide, improves collaboration and reduces errors.
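
A controlled vocabulary can also be enforced in code, for example when reviewing the column names of a new dataset. In this minimal sketch, `GLOSSARY` holds the approved terms, `SYNONYMS` records the style guide's "use X, not Y" mappings, and anything else is flagged for review; all of the terms shown are hypothetical.

```python
# Approved terms and their definitions (the glossary). Hypothetical entries.
GLOSSARY = {
    "customer_id": "Unique identifier of a customer account.",
    "order_date": "Date the order was placed (ISO 8601).",
    "net_revenue": "Revenue after discounts and refunds, in USD.",
}

# Known variants mapped to the approved term (the style guide's rules).
SYNONYMS = {
    "cust_id": "customer_id",
    "client_id": "customer_id",
    "customerid": "customer_id",
    "purchase_date": "order_date",
    "net_sales": "net_revenue",
}


def normalize(term):
    """Return (canonical_term, status) where status is 'ok', 'renamed', or 'unknown'."""
    key = term.strip().lower().replace(" ", "_").replace("-", "_")
    if key in GLOSSARY:
        return key, "ok"
    if key in SYNONYMS:
        return SYNONYMS[key], "renamed"
    return key, "unknown"


def audit_columns(columns):
    """Map each column to its canonical term and list names the vocabulary does not cover."""
    mapping, unknown = {}, []
    for col in columns:
        canonical, status = normalize(col)
        if status == "unknown":
            unknown.append(col)
        else:
            mapping[col] = canonical
    return mapping, unknown


mapping, unknown = audit_columns(["Cust ID", "purchase_date", "net_revenue", "promo_flag"])
print(mapping)  # {'Cust ID': 'customer_id', 'purchase_date': 'order_date', 'net_revenue': 'net_revenue'}
print(unknown)  # ['promo_flag']
```

Unknown terms are reported rather than guessed, so a data steward can decide whether to add them to the glossary or rename them.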

7. Reproducible Environment Documentation

Reproducible environment documentation ensures that data workflows can be replicated consistently across systems and that users maintain data integrity and transparency. It involves documenting Environment Specifications (hardware, software, and dependencies, using tools like GitHub for version control and Docker for containerization), Data Setup and Dependencies (datasets, sources, and structures), Automation of Environment Setup (providing setup scripts), and Code and Configuration Files (also kept under version control). Test and Validation Procedures should also be outlined.
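
Part of this documentation can be generated automatically. The sketch below, using only the Python standard library, records the interpreter version, operating system, selected package versions, and SHA-256 fingerprints of input files, then compares a later run against that snapshot. It complements rather than replaces Docker images and version-controlled code; the package list and file names are assumptions for illustration.

```python
import hashlib
import json
import os
import platform
import tempfile
from importlib import metadata


def file_sha256(path, chunk_size=65536):
    """Fingerprint a data file so later runs can confirm they use identical inputs."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def capture_environment(packages, data_files):
    """Snapshot the interpreter, OS, selected package versions, and input data hashes."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # record absence explicitly
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
        "data": {path: file_sha256(path) for path in data_files},
    }


def diff_environments(expected, actual):
    """List settings that differ; the OS string is treated as informational only."""
    problems = []
    if expected["python"] != actual["python"]:
        problems.append(f"python: expected {expected['python']}, found {actual['python']}")
    for section in ("packages", "data"):
        for name, want in expected[section].items():
            have = actual[section].get(name)
            if have != want:
                problems.append(f"{section}/{name}: expected {want}, found {have}")
    return problems


with tempfile.TemporaryDirectory() as tmp:
    data_path = os.path.join(tmp, "orders.csv")  # hypothetical input file
    with open(data_path, "w") as fh:
        fh.write("order_id,amount\n10,250.00\n")

    snapshot = capture_environment(["pip"], [data_path])
    print(json.dumps(snapshot, indent=2))  # e.g. commit this alongside the code

    # Later: the input file changes, and the check reports the difference.
    with open(data_path, "a") as fh:
        fh.write("11,99.50\n")
    problems = diff_environments(snapshot, capture_environment(["pip"], [data_path]))
    print(problems)
```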

8. User-Centric Guidance and Collaboration Framework

Data users need clear guidance and a framework that fosters collaboration. This includes:

- User-Focused Documentation: Simple, role-specific documentation with real-world examples.

- Collaboration Tools and Platforms: Using tools for centralized communication and collaborative workspaces.

- Access Control and Role-Based Collaboration: Defining clear access rights based on roles (leveraging tools like AWS IAM or Azure AD) and facilitating shared data access.

- Feedback Loops and Continuous Improvement: Regularly collecting feedback and updating documentation.

- Data Governance and Ownership: Assigning data stewardship and involving stakeholders in data quality maintenance.

- Data Ethics and Responsible Use: Ensuring data handling follows ethical guidelines (privacy, fairness, transparency) and promoting ongoing discussions.
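
Role-based access control comes down to mapping users to roles and roles to permitted actions, denying anything not explicitly granted, and logging each decision for audit. The minimal sketch below shows that logic with hypothetical roles, users, and datasets. It is not how AWS IAM or Azure AD are configured; in those services, equivalent rules are expressed through their own policy and role-assignment features.

```python
from datetime import datetime, timezone

# Hypothetical role definitions: role -> set of (dataset, action) pairs it may perform.
ROLE_PERMISSIONS = {
    "analyst": {("sales", "read")},
    "data_engineer": {("sales", "read"), ("sales", "write"),
                      ("staging", "read"), ("staging", "write")},
    "data_steward": {("sales", "read"), ("sales", "approve_change")},
}

# Hypothetical user -> roles assignments; a user may hold several roles.
USER_ROLES = {
    "priya": {"analyst"},
    "marco": {"data_engineer"},
    "lena": {"analyst", "data_steward"},
}

audit_log = []


def is_allowed(user, dataset, action):
    """Grant access only if one of the user's roles explicitly permits it; record the decision."""
    roles = USER_ROLES.get(user, set())  # unknown users have no roles
    allowed = any((dataset, action) in ROLE_PERMISSIONS.get(role, set()) for role in roles)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "dataset": dataset, "action": action, "allowed": allowed,
    })
    return allowed


print(is_allowed("priya", "sales", "read"))            # True
print(is_allowed("priya", "sales", "write"))           # False: analysts are read-only
print(is_allowed("lena", "sales", "approve_change"))   # True, via the data_steward role
print(is_allowed("guest", "sales", "read"))            # False: deny by default
print(len(audit_log))                                  # 4
```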

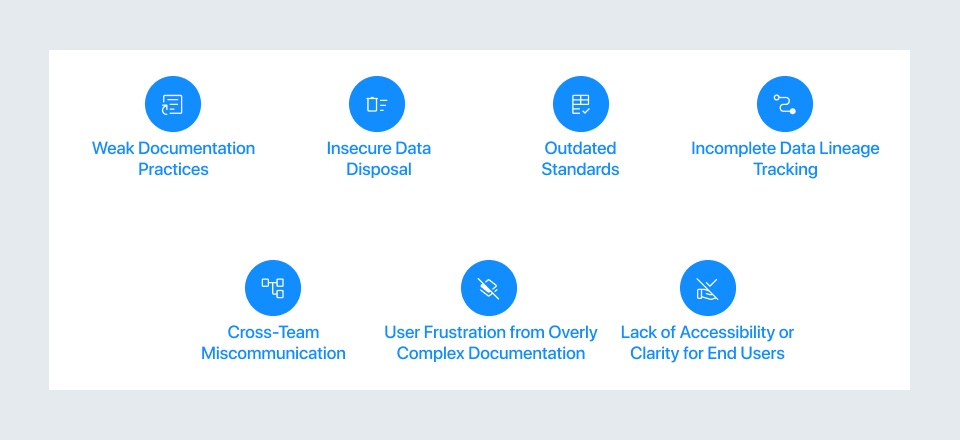

Avoiding the Pitfalls

Neglecting structured data management and documentation introduces significant risks, which the Data Usage and Documentation Manual calls "Cautions." These include:

- Weak Documentation Practices: Leading to confusion and errors from incomplete or unclear documents.

- Insecure Data Disposal: Making sensitive data vulnerable to unauthorized access.

- Outdated Standards: Causing inefficiencies and risks when documentation doesn't reflect current technologies or regulations.

- Incomplete Data Lineage Tracking: Making it difficult to trace the source of errors and ensure data reliability.

- Cross-Team Miscommunication: Resulting from unclear or inconsistent documentation and a lack of communication channels.

- User Frustration from Overly Complex Documentation: Hindering effective data use when documentation is too technical or complicated.

- Lack of Accessibility or Clarity for End Users: When users cannot easily find or understand documentation, this can lead to mistakes and inefficiencies.

Implementing the core elements discussed above provides a roadmap to avoid these common and costly challenges and supports long-term data management and digital transformation initiatives.

Conclusion

For business leaders, data teams, and IT decision-makers, structured data management is not merely a technical detail but a strategic imperative. It underpins reliable business intelligence, empowers sophisticated analytics, and ensures crucial compliance with regulatory standards. By embracing comprehensive data documentation practices, defining clear frameworks and workflows, leveraging metadata management, and fostering a user-centric, collaborative environment, organizations can reap the benefits of data management across efficiency, governance, and innovation.

Ignoring these practices leads to risks such as data inconsistencies, operational inefficiencies, and missed opportunities. Conversely, investing in structured data management ensures data quality, integrity, and security, fosters trust, and aligns data initiatives with overarching business goals. Incorporating data digitalization strategies alongside cloud-native solutions can further enhance scalability and efficiency in implementing these practices.

Ready to transform your data into a powerful engine for growth and compliance?

At NeenOpal, we specialize in helping organizations like yours implement robust data management frameworks that drive digital transformation. Our expertise in data consulting spans the entire data lifecycle, from strategy and governance to implementation and optimization.

Contact NeenOpal today for a personalized consultation to explore how our data management solutions can empower your business intelligence, analytics, and compliance initiatives.

Visit our website to learn more about NeenOpal's Data Management Services.

Frequently Asked Questions

1. Why is data management important in the digital age?

Data management is essential because it ensures accuracy, consistency, and security across business operations. In today’s digital landscape, structured data management enables organizations to make data-driven decisions, improve compliance with regulations, reduce inefficiencies, and unlock the full potential of business intelligence and advanced analytics.

2. What are the key benefits of structured data management for businesses?

The main benefits of structured data management include better decision-making, enhanced data quality, improved compliance, stronger collaboration across teams, and greater scalability in digital transformation initiatives. By organizing data into structured repositories like databases or data warehouses, businesses reduce risks, eliminate redundancies, and ensure reliable insights for long-term growth.

3. How does a Data Usage and Documentation Manual improve digital data management?

A Data Usage and Documentation Manual provides standardized guidelines for collecting, documenting, and governing data. It ensures consistency, promotes cross-team collaboration, enhances data governance, and supports compliance with internal and external regulations. This structured approach helps organizations avoid data misinterpretation, improve trust in analytics, and scale their digital transformation strategies effectively.