Are You Losing Opportunities Because of Poor Data Quality?

In today's data-driven world, information is the lifeblood of every organization. Accurate, reliable data is paramount for powering critical business intelligence (BI) dashboards, driving advanced analytics initiatives, and ensuring regulatory compliance. However, many businesses grapple with the pervasive challenge of poor data quality and management. This isn't just an IT problem; it has significant financial, operational, compliance, and reputation repercussions.

At NeenOpal, we understand that achieving successful digital transformation and deriving true value from your data assets hinges on a strong data quality foundation. Drawing on insights from industry best practices, this guide explores the critical elements of a comprehensive Data Quality Management Plan and how implementing one can transform your business operations and decision-making.

The Alarming Cost of Bad Data

Poor data quality impacts far beyond minor inconveniences. Inaccurate, incomplete, or inconsistent data can lead to serious business consequences. According to Gartner, poor data quality costs organizations an average of $12.9 million annually.

Let's break down the different ways bad data can hurt your business:

-

- Financial Risk: Errors in decision-making caused by insufficient data can result in misallocated resources, unprofitable investments, and operational inefficiencies. The cost also includes the expense of fixing mistakes. Bad data can lead to missed sales opportunities and wasted marketing efforts.

- Operational Risk: Poor data hampers productivity, as employees waste time dealing with data issues. This leads to delays, inefficiencies, and bottlenecks, impacting overall business performance.

- Compliance Risk: Inaccurate data can cause organizations to fail compliance checks with regulations like tax laws, financial reporting rules, or data privacy laws such as GDPR, potentially leading to significant penalties.

- Reputational Risk: Bad data erodes customer trust and brand loyalty, resulting in disengaged customers and lost sales. Public data errors can damage an organization's reputation, negatively affecting customer acquisition and retention.

Insufficient data can also lead businesses to target the wrong decision-makers, wasting resources and reducing returns on investment. This can frustrate customers and cause internal/external loss of trust.

What is Data Quality Management?

The core purpose of a Data Quality Management Plan is to define the processes, strategies, and best practices needed to ensure data integrity, accuracy, and consistency throughout its lifecycle. By effectively managing data quality, an organization can improve decision-making, enhance operational efficiency, and ensure compliance with legal and regulatory standards. This plan establishes guidelines to ensure data is accurate, complete, reliable, and timely, ultimately supporting the organization's data-driven objectives.

Data Quality Management applies to all data collected, processed, stored, and used across any business function, including Operational Data Management, Customer Relationship Management (CRM), Financial Systems, Supply Chain Management, and Product Development and Design. The plan covers data validation, cleansing, transformation, monitoring, and reporting within data pipelines, operational systems, and reporting environments.

Key aspects of data quality focus on:

-

- Completeness: Is all required data present? [10, see image]

- Accuracy: How well does the data reflect reality? [10, see image]

- Timeliness: Is the data up to date? [10, see image]

- Consistency: Is the data consistent across different systems and formats? [10, see image]

- Validity: Is the data valid according to predefined rules? [10, see image]

- Uniqueness: Are all entries unique where required? [10, see image]

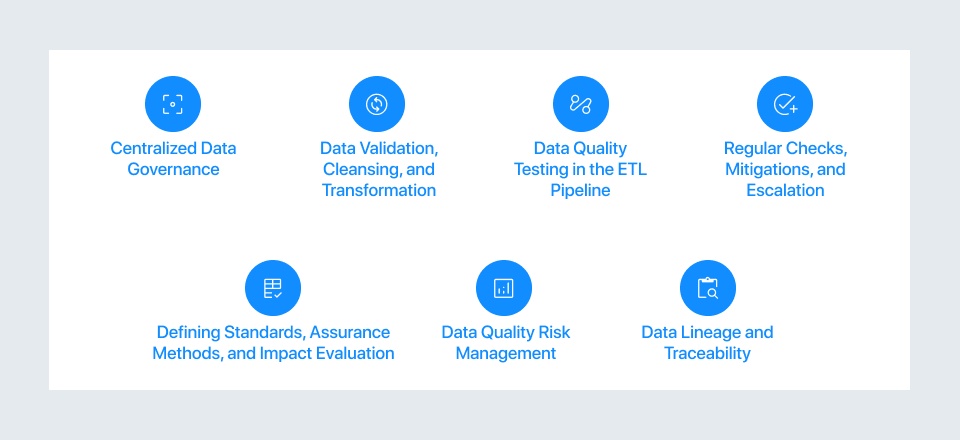

Core Elements of a Robust Data Quality Management Plan

Ensuring high data quality requires a structured approach, encompassing several key elements.

Ensuring high data quality requires a structured approach, encompassing several key elements.

1. Centralized Data Governance

A centralized governance framework is fundamental for managing and accessing data in a standardized manner. This involves:

- Implementing a unified data repository to consolidate information, eliminating redundancy and inconsistencies.

- Defining clear data ownership and stewardship roles to ensure accountability for maintaining data quality.

- Developing standardized data formats and naming conventions for seamless integration across systems and teams.

2. Data Validation, Cleansing, and Transformation

These three processes ensure data is accurate, consistent, and useful for analysis and decision-making.

-

Validation: Ensures data entering the system is correct, complete, and relevant. Techniques include:

- Data Type Validation: Checking that data matches the expected type (e.g., ensuring an age field only accepts numbers).

- Constraint Validation: Ensuring data fits within specified requirements (e.g., password complexity).

- Format Validation: Checking that data matches a specified format (e.g., MM/DD/YYYY for dates).

- Range Validation: Verifying that data values fall within a realistic or defined range.

- Consistency Validation: Ensuring logical consistency across records or systems (e.g., ship date is after order date).

- Uniqueness Validation: Ensuring values like IDs or email addresses are unique.

- Referential Integrity Validation: Checking that relationships between data in different tables are valid.

- Freshness Validation: Confirming data is up-to-date, especially for analytics or data warehousing.

- User Acceptance Testing (UAT): Validating the system's compliance with business requirements through real-world testing.

-

Cleansing: Identifying and correcting or removing inaccurate, incomplete, or irrelevant data. Techniques include:

- Removing Duplicates: Identifying and merging duplicate records.

- Standardizing Data: Ensuring data adheres to a consistent format (e.g., address formats).

- Handling Missing Values: Address data gaps using methods like Mean/Median/Mode Imputation, KNN, Time-Series Techniques, MICE, or Machine Learning Models. The choice depends on the dataset's complexity.

- Outlier Detection and Treatment: Identifying data points that significantly differ from others using techniques like Z-Score, IQR, Visual Inspection, Isolation Forest, or DBSCAN. The appropriate technique varies based on dataset complexity.

-

Transformation: Changing the format, structure, or values of data to make it more suitable for analysis. Techniques include:

- Data Aggregation: Combining records for summarized information (e.g., total monthly revenue).

- Normalization: Scaling data to a specific range (e.g., 0 to 1) for standardization.

- Pivoting and Unpivoting: Transforming data between wide and long formats for analysis or reporting.

- Joining Data: Combining data from multiple sources based on a common key.

- Data Filtering: Extracting relevant data subsets using conditions.

- Data Enrichment: Adding external data to enhance the dataset.

- Data Encoding: Converting categorical variables into numerical formats for machine learning.

- Feature Engineering: Creating new variables from existing data to improve predictive models.

Inconsistent or unvalidated entries often stem from unstandardized data capture. The NeenOpal case study on structured data entry at scale showcases how guided forms, dropdown-based validation, and real-time error logging drastically improved accuracy and user accountability, echoing our recommendations for centralized governance and validation workflows. Best practices for these processes include automating validation and cleansing using tools, regularly monitoring data quality metrics via dashboards and alerts, and involving stakeholders.

3. Data Quality Testing in the ETL Pipeline

Testing data quality at various stages of the Extract, Transform, Load (ETL) pipeline is crucial. Different testing strategies can be implemented:

-

- Unit Tests: Validate individual components, such as SQL queries or transformation logic, using dummy data. Tools like Pytest can be used.

- Component Tests: Validate data schema and integrity at the component level, ensuring adherence to business rules. Tools include Great Expectations and Data Build Tool (DBT).

- Flow Tests: Ensure data completeness, accuracy, and consistency throughout pipelines, preventing loss or duplication. Great Expectations and Airflow are useful here.

- Source Tests: Validate correct data ingestion from source systems without truncation or loss, maintaining structure and freshness. Great Expectations and AWS Glue can assist.

- Functional Tests: Verify integration across system components like APIs or dashboards. Pytest and Amazon QuickSight are examples.

- Load Testing: Evaluate system performance under heavy data volumes. The Pytest-benchmark plugin can be used.

- End-to-End Testing (E2E): Simulate the entire data flow across components to ensure seamless interaction and fulfillment of business requirements. Pytest and Great Expectations are relevant tools.

- Integration Testing: Verify proper interaction between components or systems, ensuring data flows as expected. Pytest with Requests and Great Expectations can be used.

- Data Quality Matrix: Assess data using predefined quality metrics (e.g., uniqueness, standard deviation). Great Expectations offers rule-based validation and monitoring.

Automating data validation is key to efficiency and consistency. This can be achieved by leveraging ETL tools like AWS Glue, using data validation frameworks like Great Expectations, writing custom scripts, integrating validations into CI/CD pipelines (e.g., using GitHub Actions), and implementing real-time validation for streaming data with tools like Apache Kafka or AWS Kinesis. Additionally, managing infrastructure using Infrastructure as Code (IaC) tools like AWS CloudFormation ensures consistent provisioning, reducing errors, and enhancing collaboration.

4. Regular Checks, Mitigations, and Escalation

Ongoing data quality requires scheduled reviews, automated alerts, and a transparent process for addressing issues.

-

- Scheduled Reviews: Perform weekly or monthly checks on key metrics like completeness, accuracy, and timeliness using dashboards and reports.

- Alert Systems: Configure automated alerts to notify teams in real-time about outliers, schema mismatches, or missing data, triggering immediate investigation.

- Root Cause Analysis (RCA): When issues arise, analyze error logs and trace data flow to identify the source of discrepancies. The Saff Squeeze technique is mentioned as a method to narrow down potential causes systematically.

- Performance and Error Handling: Monitor data pipelines for processing times and throughput, implement retries for failed processes, and log errors for analysis.

- Backup and Recovery: Create data backups regularly (daily incremental, weekly full) and periodically test the recovery process.

- Data Quality Escalation Process: Define a clear process for notifying the data quality team, escalating unresolved issues based on priority, and documenting the resolution steps.

5. Defining Standards, Assurance Methods, and Impact Evaluation

Establishing data quality standards provides the criteria for ensuring data accuracy, reliability, and usefulness. Relevant frameworks include ISO 8000, DAMA-DMBOK, and Six Sigma for Data Management. The ISO/IEC 25012: Data Quality Model classifies attributes into inherent (accuracy, consistency, completeness) and system-dependent (accessibility, portability, security) data quality.

Assurance methods help organizations maintain data accuracy and minimize errors. These include:

-

- Audits and Reviews

- Data Profiling

- Validation Rules

- Standardized Data Entry, training, and templates

- Real-time Monitoring of metrics

- Feedback Loops from stakeholders to foster continuous improvement

Evaluating the impact of data quality initiatives measures effectiveness and identifies areas for improvement. Methods include assessing performance metrics (efficiency, accuracy, error reduction), conducting cost-benefit analysis, collecting stakeholder feedback, measuring risk mitigation effectiveness, performing comparative outcomes analysis, and monitoring long-term sustainability benefits.

6. Data Quality Risk Management

Identifying potential risks early is key to proactive intervention. Common issues include inaccuracies, inconsistencies, missing values, outdated information, duplicate data, and unsynchronized data.

Risk mitigation strategies involve proactive monitoring and contingency planning.

-

- Proactive Monitoring: Using AI/ML tools for real-time anomaly detection, tracking health metrics on centralized dashboards, setting up regular audits, and automated alerts. Best practices include defining KPIs, automating anomaly detection, and using predictive analytics.

- Contingency Planning: Preparing action plans to rapidly resolve risks like system outages or data breaches, simulating failure scenarios, and testing backup/recovery processes. Best practices include off-site backups, forming a disaster recovery team, and regularly updating plans.

A structured Risk Assessment Framework evaluates the severity and likelihood of data quality risks. Components include:

-

- Risk Scoring: Assigning scores based on potential impact and likelihood to prioritize risks.

- Scenario Analysis: Simulating potential risks to evaluate their effects on processes and outcomes.

- Response Planning: Developing tiered strategies tailored to risk severity.

- Performance Reviews: Regularly assessing the effectiveness of risk management measures.

7. Data Lineage and Traceability

Understanding where data comes from, how it's transformed, and where it's used is crucial for trust, analysis, and compliance.

-

- Data Lineage Documentation: Capturing the data's origin, transformations (cleaning, merging, enriching), and final destinations (dashboards, reports, systems).

- Visualization Tools: Simplifying understanding through flow diagrams, dependency mapping, and interactive analysis.

- Traceability: Ensuring every data point can be traced back to its origin (upstream traceability) for authenticity verification and issue resolution, and understanding its use downstream for impact assessment and error correction.

Centralized architecture isn't enough without traceability. In building a robust Snowflake data warehouse, NeenOpal implemented comprehensive log management, role-based access controls, and immutable records, reinforcing our emphasis on lineage, audit readiness, and secure data stewardship.

Leveraging Cloud-Native Solutions

Modern cloud platforms offer robust tools that significantly support data quality management efforts. For instance, AWS services like AWS Glue facilitate data cataloging, validation, and cleansing, while SAP on AWS can manage consistent data across departments. Apache Kafka and AWS Kinesis enable real-time data integration and validation, and AWS IoT Core or Azure IoT Hub can track data from sensors in real time. Data visualization tools like Power BI or Tableau help monitor and analyze data quality metrics. Azure Data Factory and AWS Glue provide data integration and ETL capabilities for consolidation and de-duplication, while Azure Purview and AWS Glue Data Catalog help enforce data governance policies, lineage, and timeliness. Microsoft Dynamics 365 offers integrated ERP functions for managing data like supplier records. These cloud-native solutions provide scalability, automation, and integrated features that are essential for effective DQM.

Continuous Improvement: An Ongoing Commitment

Maintaining data quality is not a one-time project but an ongoing process. Vigilance is required to address challenges like data overload and human error. Regularly monitoring KPIs, leveraging advanced analytics, and fostering a culture of data stewardship across the organization are vital to identifying and addressing issues promptly. Neglecting these practices can lead to compromised decision-making and operational inefficiencies.

Conclusion

In conclusion, a robust Data Quality Management Plan is essential for organizations aiming to achieve reliable business intelligence, effective analytics, and stringent compliance. Businesses can unlock the full potential of their data assets by implementing a well-defined data quality management process and focusing on key areas such as data governance, systematic validation, cleansing, and transformation, continuous monitoring, risk management, and clear data lineage. Incorporating strong data quality control measures and leveraging modern cloud-native solutions empowers organizations to build a foundation of trusted data, leading to improved decision-making, increased efficiency, and enhanced competitive advantage.

Is your organization struggling with data quality issues? Are you looking to establish or refine your data quality management strategy?

Discover how NeenOpal's expertise in data consulting and digital transformation can help you build a solid data foundation. Contact us today for a consultation or explore our comprehensive data management solutions!

Frequently Asked Questions

1. What is data quality, and why does it matter for businesses?

Data quality refers to the accuracy, completeness, consistency, and reliability of data across an organization. High-quality data is critical for business intelligence, regulatory compliance, and advanced analytics. Poor data quality leads to financial losses, compliance risks, and operational inefficiencies.

2. How can companies improve data quality management?

Organizations can improve data quality by implementing a structured Data Quality Management (DQM) plan that includes validation, cleansing, transformation, and monitoring processes. Leveraging modern tools such as AWS Glue, Azure Purview, and Great Expectations helps automate quality checks, reduce errors, and ensure data accuracy across systems.

3. What are the biggest risks of poor data quality for enterprises?

Bad data can cause financial losses through misinformed decisions, operational inefficiencies, compliance failures with regulations like GDPR, and reputational damage that erodes customer trust.